As a marketer, you’re always looking for ways to improve your email marketing campaigns and get better results. One way to do this is by A/B testing your emails. A/B testing, also known as split testing, is a method of testing two versions of an email to see which one performs better. In this blog post, we’ll cover everything you need to know about A/B testing your ConvertKit campaigns, including what to test, how to test, and how to interpret the results.

- What is A/B Testing?

- What to Test in Your ConvertKit Campaigns

- How to Set Up A/B Testing in ConvertKit

- Interpreting the Results

Try ConvertKit

ConvertKit is the only email marketing platform we use, and will ever use.

ConvertKit is our #1 recommended email marketing platform because it has been built with care around the exact needs of creators building online businesses. Its interface is very user-friendly, and it has never been easier to segment our subscribers into focused groups so that we can deliver content specific to their needs. We’re all-in on ConvertKit.

What is A/B Testing?

A/B testing involves creating two versions of an email campaign and sending each one to a different, randomly selected group of subscribers from the same audience. The two versions should be identical except for the one variable you want to test, such as the subject line, the call-to-action, the image, or any other element of the email. Because the groups are chosen at random and only one element differs, you can compare how each version performs and reasonably attribute a meaningful difference to that element.
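You don’t have to split your list by hand, but a short sketch makes the idea of a random split concrete. This is a minimal Python illustration, not ConvertKit’s implementation: it shuffles a hypothetical list of subscriber addresses and cuts it in half, so each version goes to a comparable, randomly chosen group.

```python
import random


def split_audience(subscribers, seed=None):
    """Randomly shuffle subscribers and split them into two equal-sized groups."""
    rng = random.Random(seed)      # seeded generator so a split can be reproduced
    shuffled = list(subscribers)   # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical subscriber list used only for illustration.
subscribers = [f"reader{i}@example.com" for i in range(10)]
group_a, group_b = split_audience(subscribers, seed=42)
print(len(group_a), len(group_b))
```

Shuffling before splitting matters: taking, say, the first half of a list sorted by signup date would send version A to your oldest subscribers and confound the test.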

What to Test in Your ConvertKit Campaigns

There are several elements of your email campaigns that you can test using A/B testing. Here are a few ideas to get you started:

- Subject lines – Test different subject lines to see which one results in a higher open rate.

- Call-to-action – Test different call-to-action buttons or links to see which one results in a higher click-through rate.

- Personalization – Test personalized emails against non-personalized emails to see if personalization makes a difference.

- Send time – Test different send times to see if there is a particular time of day or day of the week that results in higher engagement.

- Length of email – Test shorter vs. longer emails to see which one results in higher engagement.

How to Set Up A/B Testing in ConvertKit

Setting up A/B testing in ConvertKit is a straightforward process. Here are the steps:

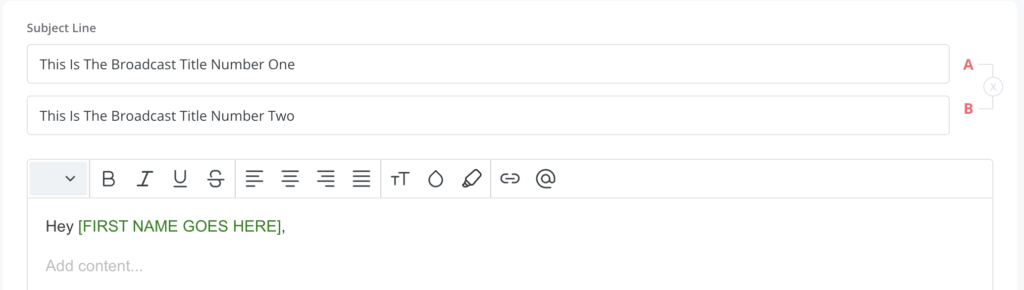

- Create two versions of your email campaign that differ in only one variable.

- Go to the Broadcasts tab in ConvertKit and create a new broadcast.

- Select the segment of your audience that you want to send the A/B test to.

- Choose “Split Test” as your broadcast type.

- Choose which variable you want to test, and select the two versions of your email campaign.

- Set the test duration and the winning metric (open rate or click-through rate).

- Schedule your A/B test to be sent at the desired time.
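Before you set the test duration, it helps to know roughly how many recipients each version needs for a difference to be detectable at all. The sketch below uses the standard sample-size approximation for comparing two proportions. The 40% baseline open rate and 45% target are hypothetical, the 5% significance level and 80% power are common conventions rather than ConvertKit settings, and ConvertKit does not necessarily run this calculation for you.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(p_a, p_b, alpha=0.05, power=0.8):
    """Approximate recipients needed per version to detect a change from
    rate p_a to rate p_b with a two-sided two-proportion z-test."""
    if p_a == p_b:
        raise ValueError("p_a and p_b must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 when power = 0.8
    variance = p_a * (1 - p_a) + p_b * (1 - p_b)
    return ceil((z_alpha + z_power) ** 2 * variance / (p_a - p_b) ** 2)


# Hypothetical: current open rate of 40%, hoping to detect a lift to 45%.
n = sample_size_per_variant(0.40, 0.45)
print(f"About {n} recipients per version")
```

Under these assumptions the function asks for roughly 1,500 recipients per version. Smaller differences need many more recipients, so if your list is small, bolder changes are easier to detect.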

Interpreting the Results

When interpreting the results of your A/B test in ConvertKit, look beyond the open rate and click-through rate. These metrics are valuable, but a more comprehensive analysis gives you a deeper understanding of how well each version worked and can guide your future email marketing strategy. Here’s how to dig deeper:

Statistical Significance:

Before drawing conclusions, make sure the difference in performance between versions A and B is statistically significant, that is, unlikely to be explained by random chance alone. Otherwise you may be acting on noise rather than a genuine difference in response rates. Use a statistical calculator or a standard test, such as a two-proportion z-test on open or click counts, to check.
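A two-proportion z-test is one common way to run this check on open or click counts. The following is a minimal Python sketch using only the standard library; the counts are hypothetical, and the 0.05 threshold is a widely used convention, not a rule.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two proportions,
    e.g. opens out of emails delivered for versions A and B."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate: the single rate we'd expect if the versions performed the same.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return z, p_value


# Hypothetical counts: 420 opens from 1,000 sends (A) vs 480 opens from 1,000 sends (B).
z, p = two_proportion_z_test(420, 1000, 480, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print("The difference is statistically significant at the 5% level.")
else:
    print("Not enough evidence that the versions really differ.")
```

A small p-value says the gap is unlikely to be pure chance; it does not say the gap is large enough to matter for your business, so read it alongside the size of the difference.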

Secondary Metrics:

While open rates and click-through rates are primary indicators, consider analyzing secondary metrics such as conversion rates, bounce rates, and unsubscribe rates. These metrics offer a more comprehensive view of how each version influences recipient behavior beyond the initial interaction.
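To compare versions on several metrics at once, it can help to compute all of the rates from the same raw counts. The sketch below is illustrative: the counts are made up, and the denominators (bounces per email sent, everything else per email delivered) are one reasonable choice that may differ from how ConvertKit or your analytics tool defines each rate.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    """Hypothetical raw counts for one version of a split-tested email."""
    sent: int
    bounces: int
    opens: int
    clicks: int
    conversions: int
    unsubscribes: int

    def rates(self):
        delivered = self.sent - self.bounces  # emails that actually reached an inbox
        return {
            "bounce rate": self.bounces / self.sent,
            "open rate": self.opens / delivered,
            "click-through rate": self.clicks / delivered,
            "conversion rate": self.conversions / delivered,
            "unsubscribe rate": self.unsubscribes / delivered,
        }


results = {
    "A": VariantResult(sent=1000, bounces=20, opens=410, clicks=60, conversions=9, unsubscribes=3),
    "B": VariantResult(sent=1000, bounces=20, opens=470, clicks=55, conversions=12, unsubscribes=8),
}

for name, result in results.items():
    summary = ", ".join(f"{metric} {value:.1%}" for metric, value in result.rates().items())
    print(f"{name}: {summary}")
```

In this made-up example, version B wins on opens and conversions, version A wins on clicks, and B also loses more subscribers. An open-rate-only comparison would hide that trade-off.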

Segment Analysis:

Break down the results by audience segments to identify if certain segments respond differently to one version over the other. This insight can guide future segmentation strategies, allowing you to tailor your content more effectively based on audience preferences and behaviors.
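A segment breakdown is just the same rate calculation grouped by segment and version. Here is a minimal Python sketch; the `(segment, version, clicked)` records and the segment names "new" and "long-time" are hypothetical, standing in for whatever per-subscriber data you can export.

```python
from collections import defaultdict


def click_rate_by_segment(records):
    """Return {segment: {version: click rate}} from (segment, version, clicked) tuples."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [clicks, recipients]
    for segment, version, clicked in records:
        tally = counts[segment][version]
        tally[0] += int(clicked)
        tally[1] += 1
    return {
        segment: {version: clicks / total for version, (clicks, total) in versions.items()}
        for segment, versions in counts.items()
    }


# Hypothetical results: version B wins with new subscribers but not with long-time readers.
records = (
    [("new", "A", True)] * 5 + [("new", "A", False)] * 45
    + [("new", "B", True)] * 10 + [("new", "B", False)] * 40
    + [("long-time", "A", True)] * 8 + [("long-time", "A", False)] * 42
    + [("long-time", "B", True)] * 6 + [("long-time", "B", False)] * 44
)

for segment, rates in click_rate_by_segment(records).items():
    print(segment, {version: f"{rate:.0%}" for version, rate in rates.items()})
```

Each segment contains fewer recipients than the whole test, so a per-segment difference deserves its own significance check before you act on it.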

Engagement Patterns:

Examine engagement patterns beyond the initial click-through. Analyze how recipients interact with your content after clicking through, such as time spent on the landing page, engagement with other linked content, or completion of desired actions (like filling out a form or making a purchase).
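One way to compare what happens after the click is to treat it as a funnel and look at the drop-off between steps for each version. Post-click data usually lives in your website analytics or storefront rather than in the email report; the step names and counts below are hypothetical.

```python
def step_conversion(steps):
    """Given ordered (step, count) pairs, return each step's rate relative to the step before it."""
    return [
        (name, count / previous)
        for (_, previous), (name, count) in zip(steps, steps[1:])
    ]


# Hypothetical post-click counts per version, e.g. pulled from your website analytics.
funnels = {
    "A": [("clicked", 60), ("stayed 30s+ on page", 42),
          ("filled out form", 15), ("purchased", 6)],
    "B": [("clicked", 55), ("stayed 30s+ on page", 44),
          ("filled out form", 22), ("purchased", 9)],
}

for version, steps in funnels.items():
    print(f"Version {version}:")
    for name, rate in step_conversion(steps):
        print(f"  {name}: {rate:.0%} of previous step")
    overall = steps[-1][1] / steps[0][1]  # share of clickers who completed the last step
    print(f"  clicked -> {steps[-1][0]}: {overall:.0%}")
```

A version with fewer clicks can still come out ahead if the people who do click are more likely to finish the desired action.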

Qualitative Feedback:

Collect qualitative feedback from recipients to understand their perceptions and preferences. Surveys, polls, or direct communication can provide insights into why one version may have resonated more effectively, helping you refine your future messaging.

Iterative Testing:

Consider running iterative tests to refine your findings. Once you’ve identified a winning version, continue testing variations of that version to optimize your campaigns continuously. Small tweaks can lead to incremental improvements over time.

Long-term Impact:

Assess the long-term impact of your A/B test. While immediate results are important, monitor how the changes influenced recipient behavior over an extended period. For instance, did one version lead to more sustained engagement or increased customer retention?

Integration with Overall Strategy:

Place the A/B test results within the context of your broader marketing goals and strategies. Consider how the insights align with your brand’s messaging, value proposition, and overarching objectives.

Comparative Analysis:

Compare the A/B test results with historical data or benchmarks from previous campaigns. This comparative analysis can help you gauge the relative success of the tested elements and identify trends or patterns that may guide future optimizations.
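A simple way to make this comparison concrete is to measure the winning rate against the average of past campaigns and against how much those campaigns usually vary. The sketch below assumes you have open rates from at least two earlier broadcasts; the numbers are hypothetical, and the "standard deviations from average" figure is a rough gauge, not a formal significance test.

```python
from statistics import mean, stdev


def compare_to_history(current_rate, historical_rates):
    """Compare one rate from this test against rates from past campaigns."""
    baseline = mean(historical_rates)
    spread = stdev(historical_rates)                  # sample standard deviation; needs 2+ values
    lift = (current_rate - baseline) / baseline       # relative change vs. the historical average
    z_score = (current_rate - baseline) / spread      # how unusual this rate is vs. past variation
    return baseline, lift, z_score


# Hypothetical open rates from the last six broadcasts, and the winning version's open rate.
history = [0.41, 0.39, 0.43, 0.40, 0.42, 0.38]
baseline, lift, z_score = compare_to_history(0.47, history)
print(f"baseline {baseline:.1%}, lift {lift:+.1%}, "
      f"{z_score:+.1f} standard deviations from the historical average")
```

A large lift that is also well outside your usual range is a stronger signal than one that falls within normal week-to-week variation.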

By taking a comprehensive approach to interpreting the results of your A/B test, you’ll gain a richer understanding of the impact of your email campaign variations. This depth of insight will empower you to make more informed decisions, refine your email marketing strategies, and ultimately drive better results in future campaigns.

Conclusion

A/B testing your ConvertKit campaigns can help you improve the effectiveness of your email marketing and get better results. By testing different variables in your emails, you can determine what resonates best with your audience. Use the tips in this blog post to get started with A/B testing, and don’t forget to check out the other posts in this ConvertKit guide for more helpful tips.

Email List Building Video Workshop

Watch this free step-by-step video workshop by Isa Adney, Business Owner and Trainer at ConvertKit.
